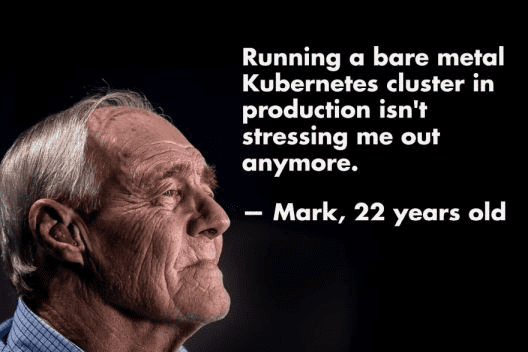

You don’t want to be like Mark, so let’s read on.

Kubernetes is an open source system to deploy, scale, and manage containerized applications anywhere. The Kubernetes scheduler is a core component of Kubernetes that assigns Pods to Nodes.

This post provides a detailed model of the Kubernetes Scheduler and assumes that the reader is familiar with the basics of Kubernetes.

Let’s get started!

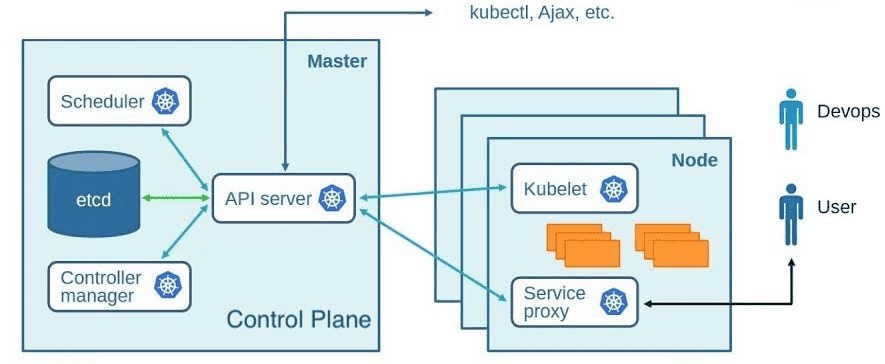

Before we jump into the underpinnings of the Kubernetes Scheduler, let’s take a look at Kubernetes’ architecture and where the scheduler fits in.

Kubernetes architecture

Here’s a diagram of a Kubernetes cluster with all the components tied together.

Control Plane

The control plane is responsible for managing the state of Kubernetes objects and responding to changes in the cluster.

Control Plane components

- API server acts as the front end for the Kubernetes control plane. It provides a CRUD interface for querying and modifying the cluster state over a RESTful API.

- etcd is a consistent and highly available key-value store used as the backing store for all cluster data.

- Scheduler watches for newly created Pods with no assigned node, and selects a node for them to run on.

- Controller manager runs controller processes to manage resources like replication, deployment, service, endpoint, namespace, etc. Internally a controller runs a reconciliation loop, which reconciles the actual state with the desired state (specified in the resource’s spec section).

All communication between control plane components happens through the API server. For example, the scheduler cannot talk to etcd directly; it has to go through the API server.

Node components

These run on every worker node and manage running pods.

- Kubelet is a component that ensures containers are running in a Pod.

- Service proxy is a network proxy that ensures clients can connect to the services defined through the Kubernetes API.

- Container runtime is the software responsible for running containers. Kubernetes supports several container runtimes, such as Docker, rkt, and containerd.

Scheduler

It’s responsible for scheduling pods onto nodes.

The scheduler waits for newly created pods through the API server’s watch mechanism and assigns a node to each new pod that doesn’t already have a node set.

Watch mechanism?

etcd implements a watch feature, which provides an event-based interface for asynchronously monitoring changes to keys.

Whenever a key gets updated, its watchers get notified.
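To make the idea concrete, here is a minimal in-memory sketch of a watchable key-value store in Go. It is not etcd’s API: the Store type and its Watch and Put methods are hypothetical names invented for illustration, and the whole thing lives in one process.

```go
package main

import (
	"fmt"
	"sync"
)

// Event describes a change to a single key.
type Event struct {
	Key, Value string
}

// Store is a toy key-value store that notifies watchers on every update.
// It only illustrates the watch idea; it is not how etcd is implemented.
type Store struct {
	mu       sync.Mutex
	data     map[string]string
	watchers map[string][]chan Event
}

// NewStore returns an empty store with no watchers.
func NewStore() *Store {
	return &Store{
		data:     make(map[string]string),
		watchers: make(map[string][]chan Event),
	}
}

// Watch returns a channel that receives an Event whenever key is updated.
// The channel is buffered; in this sketch a full buffer would block Put.
func (s *Store) Watch(key string) <-chan Event {
	s.mu.Lock()
	defer s.mu.Unlock()
	ch := make(chan Event, 16)
	s.watchers[key] = append(s.watchers[key], ch)
	return ch
}

// Put stores the value and notifies every watcher of that key.
func (s *Store) Put(key, value string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.data[key] = value
	for _, ch := range s.watchers[key] {
		ch <- Event{Key: key, Value: value}
	}
}

func main() {
	store := NewStore()
	events := store.Watch("/pods/web")

	store.Put("/pods/web", "pending")
	store.Put("/pods/web", "scheduled")

	// The watcher sees both updates, in order, without polling.
	fmt.Println(<-events)
	fmt.Println(<-events)
}
```

The point of the pattern is that the watcher never polls: it blocks on the channel and reacts only when something changes.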

It’s important to note that the Scheduler has no role in running the pod. Kubelet (a component in the worker node) has the job of running a pod.

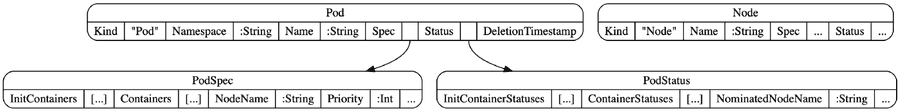

All that the Scheduler does is update the pod definition through the API server. Concretely, it sets the PodSpec.NodeName field of the Pod object, as in the example in the image above.

The API server then notifies the Kubelet (through the watch mechanism) that the pod has been scheduled. As soon as the Kubelet on the target node sees the pod has been scheduled to its node, it creates and runs the pod’s containers.
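The hand-off between the Scheduler and the Kubelet can be sketched with a simplified Pod type. The types below and the unscheduled, bind, and podsForNode helpers are hypothetical stand-ins, not the real Kubernetes client API; only the NodeName field mirrors the actual PodSpec.

```go
package main

import "fmt"

// PodSpec and Pod are stripped-down stand-ins for the real API types.
type PodSpec struct {
	NodeName string // empty until the scheduler picks a node
}

type Pod struct {
	Name string
	Spec PodSpec
}

// unscheduled returns the pods that have no node assigned yet.
// These are the only pods the scheduler is interested in.
func unscheduled(pods []*Pod) []*Pod {
	var out []*Pod
	for _, p := range pods {
		if p.Spec.NodeName == "" {
			out = append(out, p)
		}
	}
	return out
}

// bind records the scheduling decision. The scheduler's job ends here.
func bind(p *Pod, node string) {
	p.Spec.NodeName = node
}

// podsForNode returns what a kubelet cares about: pods assigned to its node.
func podsForNode(pods []*Pod, node string) []*Pod {
	var out []*Pod
	for _, p := range pods {
		if p.Spec.NodeName == node {
			out = append(out, p)
		}
	}
	return out
}

func main() {
	pods := []*Pod{
		{Name: "web"},
		{Name: "db", Spec: PodSpec{NodeName: "node-1"}},
	}

	// Scheduler side: assign every pending pod to a node (fixed here for brevity).
	for _, p := range unscheduled(pods) {
		bind(p, "node-2")
	}

	// Kubelet side: node-2 now sees a pod it has to run.
	for _, p := range podsForNode(pods, "node-2") {
		fmt.Println("kubelet on node-2 starts", p.Name)
	}
}
```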

How does Scheduler figure out which Node to use for a Pod?

Given a pool of available nodes, the techniques that could be applied for node selection range from simple to advanced.

Example of a Simple technique:

Randomly select an available node.

Example of an Advanced technique:

Use machine learning to anticipate what kind of pods are about to be scheduled in the next minutes or hours and schedule pods to maximize future hardware utilization without requiring any rescheduling of existing pods.
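The simple technique fits in a few lines of Go. This sketch assumes nodes are identified by name and uses the standard math/rand package; pickRandom is a hypothetical helper name.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
)

// pickRandom returns a uniformly random node name,
// or an error when there is no node to choose from.
func pickRandom(nodes []string) (string, error) {
	if len(nodes) == 0 {
		return "", errors.New("no nodes available")
	}
	return nodes[rand.Intn(len(nodes))], nil
}

func main() {
	node, err := pickRandom([]string{"node-1", "node-2", "node-3"})
	if err != nil {
		fmt.Println("scheduling failed:", err)
		return
	}
	fmt.Println("scheduled on", node)
}
```

Random placement ignores what the pod asks for and what the node has left, which is why it only serves as a baseline.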

What is the default scheduling algorithm?

- Node Filtering: Filtering the list of all nodes to obtain a list of acceptable nodes the pod can be scheduled to.

- Node Priority Calculation: Prioritizing the acceptable nodes and choosing the best one. If multiple nodes have the highest score, round-robin is used to ensure pods are deployed across all of them evenly.
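The two steps, including the round-robin tie-break, can be sketched as follows. This is an illustration under simplifying assumptions, not the real scheduler code: the Node and Scheduler types and the filter and score callbacks are hypothetical, and a node is described by its free CPU only.

```go
package main

import "fmt"

// Node is a simplified node with a single resource.
type Node struct {
	Name    string
	FreeCPU int
}

// Scheduler keeps a counter so that ties are broken in round-robin order.
type Scheduler struct {
	next int
}

// Schedule filters the nodes, scores the survivors, and picks the best one.
// It returns false when no node passes the filter.
func (s *Scheduler) Schedule(nodes []Node, keep func(Node) bool, score func(Node) int) (string, bool) {
	// Step 1: node filtering.
	var feasible []Node
	for _, n := range nodes {
		if keep(n) {
			feasible = append(feasible, n)
		}
	}
	if len(feasible) == 0 {
		return "", false
	}

	// Step 2: priority calculation. Collect every node sharing the top score.
	best := score(feasible[0])
	var top []Node
	for _, n := range feasible {
		sc := score(n)
		switch {
		case sc > best:
			best, top = sc, []Node{n}
		case sc == best:
			top = append(top, n)
		}
	}

	// Tie-break: rotate through the top-scoring nodes on successive calls.
	chosen := top[s.next%len(top)]
	s.next++
	return chosen.Name, true
}

func main() {
	nodes := []Node{{"node-1", 4}, {"node-2", 4}, {"node-3", 1}}
	fits := func(n Node) bool { return n.FreeCPU >= 2 }
	score := func(n Node) int { return n.FreeCPU }

	s := &Scheduler{}
	for i := 0; i < 4; i++ {
		name, _ := s.Schedule(nodes, fits, score)
		fmt.Println(name)
	}
}
```

Here node-3 is filtered out, and because node-1 and node-2 tie on score, successive calls alternate between them.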

Finding acceptable nodes

To determine which nodes are acceptable for the pod, the Scheduler passes each node through a list of configured predicate functions. These check various things such as:

- Can the node fulfill the pod’s requests for hardware resources?

- Is the node running out of resources (is it reporting a memory or a disk pressure condition)?

- If the pod requests to be scheduled to a specific node (by name), is this the node?

- Does the node have a label that matches the node selector in the pod specification (if one is defined)?

- If the pod requests to be bound to a specific host port, is that port already taken on this node or not?

- If the pod requests a certain type of volume, can this volume be mounted for this pod on this node, or is another pod on the node already using the same volume?

- Does the pod tolerate the taints of the node?

- Does the pod specify node and/or pod affinity or anti-affinity rules? If yes, would scheduling the pod to this node break those rules?

ALL of these checks must pass for the node to be eligible to host the pod. After performing these checks on every node, the Scheduler ends up with a subset of the nodes. Any of these nodes could run the pod, because they have enough available resources for the pod and conform to all requirements which were specified in the pod definition.
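A sketch of that filtering step, with three of the checks above written as predicate functions. The Node and Pod types, their fields, and the function names are simplified assumptions for illustration; they are not the real scheduler’s types or predicate names.

```go
package main

import "fmt"

// Node and Pod are simplified stand-ins for the real API objects.
type Node struct {
	Name       string
	FreeCPU    int // millicores
	FreeMemory int // MiB
	Labels     map[string]string
	UsedPorts  map[int]bool
}

type Pod struct {
	Name         string
	CPU, Memory  int
	NodeSelector map[string]string
	HostPort     int // 0 means no host port requested
}

// Predicate reports whether the pod may run on the node.
type Predicate func(p Pod, n Node) bool

// fitsResources checks the pod's resource requests against what is free.
func fitsResources(p Pod, n Node) bool {
	return p.CPU <= n.FreeCPU && p.Memory <= n.FreeMemory
}

// matchesSelector checks that every selector entry is present in the node labels.
func matchesSelector(p Pod, n Node) bool {
	for key, want := range p.NodeSelector {
		if got, ok := n.Labels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

// hostPortFree checks that the requested host port is not already taken.
func hostPortFree(p Pod, n Node) bool {
	return p.HostPort == 0 || !n.UsedPorts[p.HostPort]
}

// feasibleNodes keeps only the nodes for which every predicate passes.
func feasibleNodes(p Pod, nodes []Node, preds []Predicate) []Node {
	var out []Node
	for _, n := range nodes {
		ok := true
		for _, pred := range preds {
			if !pred(p, n) {
				ok = false
				break // one failed check is enough to reject the node
			}
		}
		if ok {
			out = append(out, n)
		}
	}
	return out
}

func main() {
	ssd := map[string]string{"disk": "ssd"}
	pod := Pod{Name: "web", CPU: 500, Memory: 256, NodeSelector: ssd, HostPort: 8080}
	nodes := []Node{
		{Name: "node-1", FreeCPU: 1000, FreeMemory: 512, Labels: ssd},
		{Name: "node-2", FreeCPU: 200, FreeMemory: 512, Labels: ssd},
		{Name: "node-3", FreeCPU: 1000, FreeMemory: 512, Labels: ssd, UsedPorts: map[int]bool{8080: true}},
	}
	preds := []Predicate{fitsResources, matchesSelector, hostPortFree}
	for _, n := range feasibleNodes(pod, nodes, preds) {
		fmt.Println("feasible:", n.Name)
	}
}
```

In this example only node-1 survives: node-2 lacks CPU and node-3 already has port 8080 in use.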

Now that we have a list of acceptable nodes, let’s find the best among them.

Selecting the best node

The definition of “best” depends largely on the context. Below are a few examples of criteria that may apply:

- If we have a two-node cluster, then both nodes are eligible. But one is already running 5 pods, while the other, for some reason, isn’t running any pods at the moment. Then, it would be a trivial decision to select the second node.

- Or if these two nodes are provided by the cloud infrastructure, it may be better to schedule the pod to the first node and relinquish the second node back to the cloud provider to save money $$$.
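Both criteria can be expressed as scoring functions over the same list of nodes. The sketch below uses the two-node cluster from the example; the Node type and the leastLoaded, mostLoaded, and best names are hypothetical and not the real scheduler’s priority functions.

```go
package main

import "fmt"

// Node is reduced to the one property the example talks about.
type Node struct {
	Name string
	Pods int // number of pods currently running
}

// ScoreFunc rates a node; a higher score is better.
type ScoreFunc func(Node) int

// leastLoaded prefers emptier nodes, which spreads pods out.
func leastLoaded(n Node) int { return -n.Pods }

// mostLoaded prefers busier nodes, which packs pods together
// so that idle nodes can be handed back to the cloud provider.
func mostLoaded(n Node) int { return n.Pods }

// best returns the highest-scoring node; the first one wins on ties.
// The nodes slice must not be empty.
func best(nodes []Node, score ScoreFunc) Node {
	winner := nodes[0]
	for _, n := range nodes[1:] {
		if score(n) > score(winner) {
			winner = n
		}
	}
	return winner
}

func main() {
	nodes := []Node{{"node-1", 5}, {"node-2", 0}}
	fmt.Println("spread:", best(nodes, leastLoaded).Name)
	fmt.Println("pack:", best(nodes, mostLoaded).Name)
}
```

With the same input, the spreading policy picks the empty node-2 while the packing policy picks the busy node-1, which is exactly the trade-off described above.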

Sure Linus!

Have a look at the Run function in scheduler.go in the official Kubernetes repository:

```go
// Run begins watching and scheduling. It waits for cache to be
// synced, then starts a goroutine and returns immediately.
func (sched *Scheduler) Run() {
	if !sched.config.WaitForCacheSync() {
		return
	}

	go wait.Until(sched.scheduleOne, 0, sched.config.StopEverything)
}
```

Run starts a goroutine that calls sched.scheduleOne in an infinite loop.
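The looping behaviour that wait.Until provides in Run can be approximated with the standard library alone. The until helper below is a hypothetical simplification written for this post, not the real implementation from the Kubernetes apimachinery package, and the counter stands in for scheduleOne.

```go
package main

import (
	"fmt"
	"time"
)

// until calls f repeatedly, pausing for period between calls,
// and returns once the stop channel is closed.
func until(f func(), period time.Duration, stop <-chan struct{}) {
	for {
		// Check for a stop request before every iteration.
		select {
		case <-stop:
			return
		default:
		}

		f()

		// Wait for the next tick, unless asked to stop in the meantime.
		select {
		case <-stop:
			return
		case <-time.After(period):
		}
	}
}

func main() {
	stop := make(chan struct{}) // stand-in for StopEverything
	done := make(chan struct{})
	count := 0

	go func() {
		defer close(done)
		until(func() {
			count++ // stand-in for one scheduleOne call
			if count == 3 {
				close(stop)
			}
		}, 0, stop)
	}()

	<-done
	fmt.Println("iterations before stop:", count)
}
```

A period of 0, as in Run, means the next call starts as soon as the previous one returns, so the loop is only paced by how long each scheduling attempt takes.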

```go
// scheduleOne does the entire scheduling workflow for a single pod.
// It is serialized on the scheduling algorithm's host fitting.
func (sched *Scheduler) scheduleOne() {
	pod := sched.config.NextPod()
	if pod.DeletionTimestamp != nil {
		// Check if pod is deleted
		// ...
		return
	}

	// Synchronously attempt to find a fit for the pod.
	start := time.Now()
	suggestedHost, err := sched.schedule(pod)
	// ...
}
```

The NextPod function pops a pod from the podQueue. Here’s a link to the actual code snippet.

```go
func (f *ConfigFactory) getNextPod() *v1.Pod {
	for {
		pod := cache.Pop(f.podQueue).(*v1.Pod)
		if f.ResponsibleForPod(pod) {
			glog.V(4).Infof("About to try and schedule pod %v", pod.Name)
			return pod
		}
	}
}
```

Some notes on the design of Scheduler

Recall from the earlier section on the Controller manager:

Internally a controller runs a reconciliation loop, which reconciles the actual state with the desired state (specified in the resource’s spec section).

K8s uses controller patterns to manage a cluster’s desired state. Here’s a link about the design guidelines for writing controllers.
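As a rough illustration of the pattern, here is a toy reconciliation loop in Go. The State type and reconcile function are hypothetical and track a single replica count; real controllers watch the API server and act on real objects.

```go
package main

import "fmt"

// State is a toy stand-in for a resource: just a replica count.
type State struct {
	Replicas int
}

// reconcile moves actual one step toward desired and
// reports whether it had to change anything.
func reconcile(desired State, actual *State) bool {
	switch {
	case actual.Replicas < desired.Replicas:
		actual.Replicas++ // e.g. create a pod
	case actual.Replicas > desired.Replicas:
		actual.Replicas-- // e.g. delete a pod
	default:
		return false // actual already matches desired
	}
	return true
}

func main() {
	desired := State{Replicas: 3} // what the spec asks for
	actual := &State{Replicas: 1} // what is currently running

	for reconcile(desired, actual) {
		fmt.Println("replicas now:", actual.Replicas)
	}
	fmt.Println("converged at", actual.Replicas)
}
```

The Scheduler follows the same shape: the desired state is that every pod has a node, and each pass through its loop moves one pending pod closer to that state.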

That’s all for this post, folks!